About

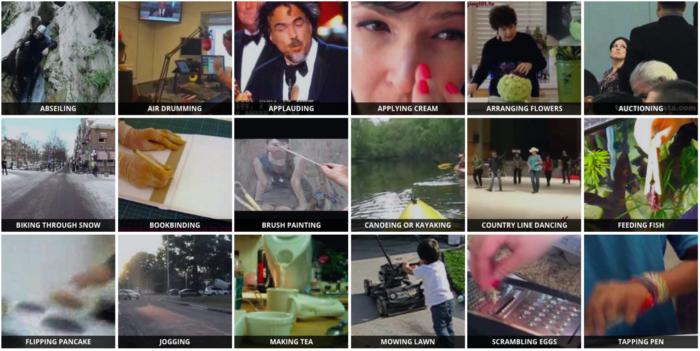

Human action recognition with spatio-temporal 3D CNNs.

Run ResNets on videos for action recognition.
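A 3D CNN classifies a short clip rather than a single image: its convolutions slide over time as well as over height and width. The sketch below shows one way a fixed number T of consecutive frames could be packed into a single clip tensor. The (1, 3, T, H, W) layout and the 112×112 frame size are assumptions based on common Kinetics-trained 3D ResNet setups, not something this page confirms. Resizing, cropping and mean subtraction are left out, and `frames_to_clip` is a hypothetical helper.

```python
import numpy as np


def frames_to_clip(frames: list) -> np.ndarray:
    """Hypothetical helper: stack T RGB frames (H, W, 3) into a (1, 3, T, H, W) clip.

    Assumes every frame is already resized to the model's input size
    (for example 112x112); preprocessing such as mean subtraction is omitted.
    """
    clip = np.stack(frames, axis=0).astype(np.float32)  # (T, H, W, 3)
    clip = np.transpose(clip, (3, 0, 1, 2))             # (3, T, H, W): channels first
    return clip[np.newaxis, ...]                        # (1, 3, T, H, W): add batch axis


frames = [np.zeros((112, 112, 3), dtype=np.uint8) for _ in range(16)]
print(frames_to_clip(frames).shape)  # (1, 3, 16, 112, 112)
```

Putting the time axis right after the channel axis is what lets a 3D kernel see several neighbouring frames at once; the exact layout expected by a given model file should be checked before use.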

🚀 Use with Ikomia API

1. Install Ikomia API

We strongly recommend using a virtual environment. If you're not sure where to start, we offer a tutorial here.

2. Create your workflow

☀️ Use with Ikomia Studio

Ikomia Studio offers a friendly UI with the same features as the API.

- If you haven't started using Ikomia Studio yet, download and install it from this page.
- For additional guidance on getting started with Ikomia Studio, check out this blog post.

📝 Set algorithm parameters

- model_name (str) - default 'resnet-18-kinetics': Name of the pre-trained model. Other pre-trained models are available:
  - resnet-34-kinetics
  - resnet-50-kinetics
  - resnet-101-kinetics
  - resnext-101-kinetics.onnx
  - wideresnet-50-kinetics.onnx
- rolling (bool) - default 'True': Whether to use rolling frame prediction (see below).
- sample_duration (int) - default '16': Number of consecutive frames passed to the model as one input clip.

If rolling frame prediction is used, we perform N classifications for a video of N frames, one per frame (once the deque data structure is filled, of course). If rolling frame prediction is not used, we only have to perform N / sample_duration classifications, which significantly reduces the time it takes to process a video stream.
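To make the cost difference concrete, here is a small pure-Python sketch that only counts classifier calls for both strategies; it does not run a model. The rolling branch uses a `collections.deque` with `maxlen=sample_duration` as a sliding window, while the non-rolling branch collects non-overlapping batches. `count_classifications` is a hypothetical helper written for illustration, not part of the algorithm's code.

```python
from collections import deque


def count_classifications(num_frames: int, sample_duration: int, rolling: bool) -> int:
    """Hypothetical helper: count clip classifications for a video of num_frames frames."""
    calls = 0
    if rolling:
        # Sliding window: keep only the last `sample_duration` frames and
        # classify on every new frame once the deque is full.
        window = deque(maxlen=sample_duration)
        for frame_idx in range(num_frames):
            window.append(frame_idx)
            if len(window) == sample_duration:
                calls += 1
        return calls
    # Non-overlapping batches: collect `sample_duration` frames, classify, then reset.
    batch = []
    for frame_idx in range(num_frames):
        batch.append(frame_idx)
        if len(batch) == sample_duration:
            calls += 1
            batch.clear()
    return calls


print(count_classifications(64, 16, rolling=True))   # 49
print(count_classifications(64, 16, rolling=False))  # 4
```

With 64 frames and sample_duration = 16, the rolling strategy classifies 64 - 16 + 1 = 49 clips, whereas the batched strategy classifies only 64 / 16 = 4.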

Parameter values must be given as strings when added to the parameter dictionary.
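A minimal sketch of building such a dictionary from typed values. It assumes that plain `str()` conversion produces the expected format (for example `"True"` for a boolean and `"16"` for an integer); check how your version of the algorithm parses these strings. The resulting dictionary would then be handed to the task, for example through its `set_parameters()` method in the Ikomia API (assumed here, not shown). `to_string_params` is a hypothetical helper.

```python
def to_string_params(params: dict) -> dict:
    """Hypothetical helper: convert every parameter value to its string form."""
    return {name: str(value) for name, value in params.items()}


params = to_string_params({
    "model_name": "resnet-18-kinetics",
    "rolling": True,
    "sample_duration": 16,
})
print(params)
# {'model_name': 'resnet-18-kinetics', 'rolling': 'True', 'sample_duration': '16'}
```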

Developer

Ikomia

License

MIT License

A short and simple permissive license with conditions only requiring preservation of copyright and license notices. Licensed works, modifications, and larger works may be distributed under different terms and without source code.

| Permissions | Conditions | Limitations |
|---|---|---|
| Commercial use | License and copyright notice | Liability |
| Modification | | Warranty |
| Distribution | | |
| Private use | | |

This is not legal advice: this description is for informational purposes only and does not constitute the license itself. Provided by choosealicense.com.