About

Run Florence-2 object detection with or without a text prompt.

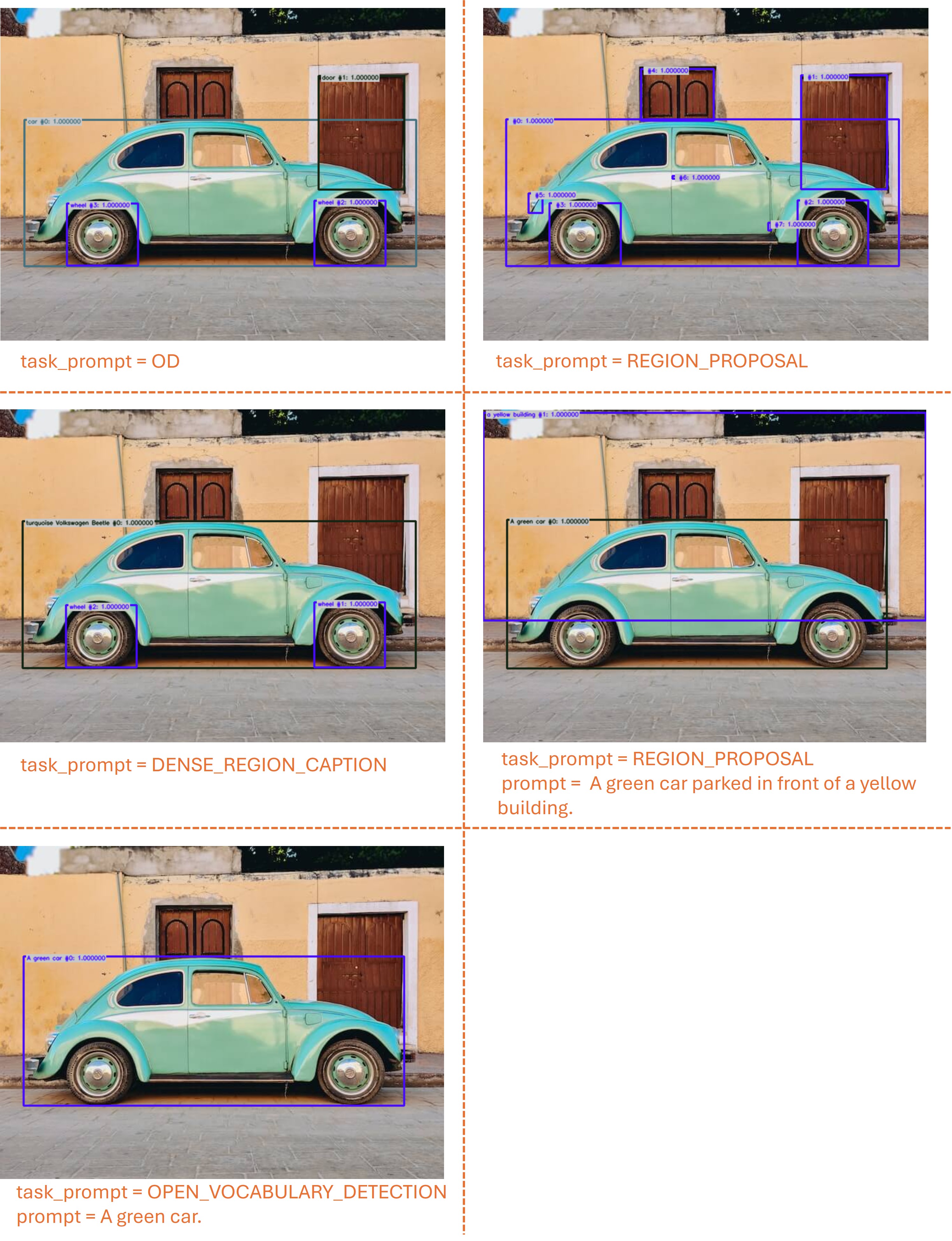

Florence-2 is an advanced vision foundation model that uses a prompt-based approach to handle a wide range of vision and vision-language tasks. With this algorithm, you can run Florence-2 object detection with several task types, with or without a text prompt.

🚀 Use with Ikomia API

1. Install Ikomia API

We strongly recommend using a virtual environment. If you're not sure where to start, we offer a tutorial here.

2. Create your workflow
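A minimal sketch of such a workflow, wrapped in a function so that Ikomia is only imported when it is actually called. The algorithm name `infer_florence_2_detection` and the function name `run_florence2_detection` are assumptions made for illustration; check the algorithm page for the exact identifier before relying on it.

```python
def run_florence2_detection(image_path, task_prompt="OD"):
    """Run Florence-2 detection on a local image and return the annotated image.

    Requires the Ikomia API to be installed; the import is done lazily
    so that defining this function has no side effects.
    """
    from ikomia.dataprocess.workflow import Workflow

    # Create an empty workflow
    wf = Workflow()

    # Add the detection algorithm (name is an assumption, see note above)
    detector = wf.add_task(name="infer_florence_2_detection", auto_connect=True)

    # Parameter values are passed as strings
    detector.set_parameters({"task_prompt": str(task_prompt)})

    # Run the workflow on the image file
    wf.run_on(path=image_path)

    # Image with the detected boxes drawn on top
    return detector.get_image_with_graphics()
```

Calling `run_florence2_detection("path/to/image.jpg")` downloads the default model on first use, so the first run can take a while.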

☀️ Use with Ikomia Studio

Ikomia Studio offers a friendly UI with the same features as the API.

- If you haven't started using Ikomia Studio yet, download and install it from this page.

- For additional guidance on getting started with Ikomia Studio, check out this blog post.

📝 Set algorithm parameters

- model_name (str) - default 'microsoft/Florence-2-base': Name of the Florence-2 pre-trained model. Other available models:
    - microsoft/Florence-2-large
    - microsoft/Florence-2-base-ft
    - microsoft/Florence-2-large-ft

- task_prompt (str) - default 'OD': Type of object detection task. Available tasks:
    - OD
    - DENSE_REGION_CAPTION
    - REGION_PROPOSAL
    - CAPTION_TO_PHRASE_GROUNDING: requires a prompt
    - OPEN_VOCABULARY_DETECTION: requires a prompt; can detect both objects and OCR text

- prompt (str): Text input to guide the object detection task. Compatible only with the CAPTION_TO_PHRASE_GROUNDING and OPEN_VOCABULARY_DETECTION tasks.

- num_beams (int) - default '3': Setting the number of beams higher than 1 switches generation from greedy search to beam search. This strategy evaluates several hypotheses at each time step and eventually chooses the one with the highest overall probability for the entire sequence. Its advantage is that it can find high-probability sequences that start with lower-probability initial tokens and would have been ignored by greedy search.

- do_sample (bool) - default 'False': If set to True, this parameter enables decoding strategies such as multinomial sampling, beam-search multinomial sampling, Top-K sampling and Top-p sampling. All these strategies select the next token from the probability distribution over the entire vocabulary with various strategy-specific adjustments.

- early_stopping (bool) - default 'False': Controls the stopping condition for beam-based methods such as beam search. It accepts the following values: True, where generation stops as soon as there are num_beams complete candidates; False, where a heuristic is applied and generation stops when it is very unlikely to find better candidates; "never", where the beam search procedure only stops when there cannot be better candidates (canonical beam search algorithm).

- cuda (bool): If True, run inference on the GPU with CUDA. If False, run on the CPU.

Optionally, you can load a custom model.

Parameter values must be given as strings when added to the dictionary.
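The rules above (which tasks need a prompt, and values given as strings) can be sketched as a small helper. `build_parameters` and the two task sets are hypothetical names introduced for this example; they are not part of the Ikomia API.

```python
from typing import Dict, Optional

# Tasks listed above that require a text prompt
PROMPT_TASKS = {"CAPTION_TO_PHRASE_GROUNDING", "OPEN_VOCABULARY_DETECTION"}
# Tasks listed above that run without a prompt
PROMPT_FREE_TASKS = {"OD", "DENSE_REGION_CAPTION", "REGION_PROPOSAL"}


def build_parameters(task_prompt: str = "OD",
                     prompt: Optional[str] = None,
                     **others) -> Dict[str, str]:
    """Validate the task/prompt pair and return a dict of string values."""
    if task_prompt not in PROMPT_TASKS | PROMPT_FREE_TASKS:
        raise ValueError(f"Unknown task_prompt: {task_prompt!r}")
    if task_prompt in PROMPT_TASKS and not prompt:
        raise ValueError(f"{task_prompt} requires a prompt")
    if task_prompt in PROMPT_FREE_TASKS and prompt:
        raise ValueError(f"{task_prompt} does not accept a prompt")

    params = {"task_prompt": task_prompt, **others}
    if prompt:
        params["prompt"] = prompt

    # Every value is converted to its string form, e.g. 3 -> "3", False -> "False"
    return {key: str(value) for key, value in params.items()}


params = build_parameters(
    "OPEN_VOCABULARY_DETECTION",
    prompt="a green car",
    num_beams=3,
    do_sample=False,
)
print(params)
```

The resulting dictionary holds only string values, so it can be handed to the algorithm as is, for example through `set_parameters` on the task object.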

🔍 Explore algorithm outputs

Every algorithm produces specific outputs, yet they can all be explored the same way using the Ikomia API. For a more in-depth understanding of managing algorithm outputs, please refer to the documentation.

Developer

Ikomia

License

MIT License

A short and simple permissive license with conditions only requiring preservation of copyright and license notices. Licensed works, modifications, and larger works may be distributed under different terms and without source code.

| Permissions | Conditions | Limitations |
|---|---|---|
| Commercial use | License and copyright notice | Liability |
| Modification | | Warranty |
| Distribution | | |
| Private use | | |

This is not legal advice: this description is for informational purposes only and does not constitute the license itself. Provided by choosealicense.com.